Over the past year, we have been presented with a unique set of challenges. Living and working from home has been difficult for all of us, and it has been especially hard on research projects. However, this was the perfect opportunity to test a machine meant for such a scenario. FarmBot gives us a sneak peek into the future of farming: fully automated and sustainable. These are imperative steps towards increasing the availability of fresh produce and reducing the effects of climate change, along with the plastic packaging, pesticide use, and carbon emissions that continue to pollute the earth and the food we eat. Even though setting up FarmBot itself proved an arduous task, the final result is a sustainable farming method that automates both data collection and plant maintenance.

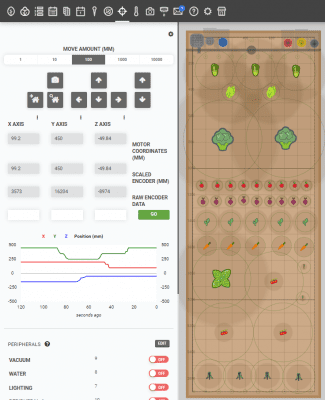

FarmBot is an autonomous, open-source computer numerical control (CNC) farming robot that prioritizes sustainability and optimizes modern farming techniques. Using computer numerical control, FarmBot can accurately and repeatedly conduct experiments with no human input and, therefore, very little error. We can write sequences and plan regimens and events to collect data 24/7, and we can monitor the system remotely. This allows us to plan as many plants, crops, inputs, and operations as needed. Reducing cost and making farming more sustainable are priorities of FarmBot.

The FarmBot Genesis features a gantry mounted on tracks attached to the sides of a raised garden bed. The tracks create a great level of precision because the garden bed is represented as a grid in which plant locations and tools can have specific coordinates, therefore allowing endless customization of your garden. The gantry bridges both sides of the track and uses a belt and pulley drive system and V-wheels to move along the X-axis (the tracks) and the Y-axis (the gantry). The cross-slide that controls the Y-axis also utilizes a leadscrew to move the Z-axis extrusion, allowing for up and down movement as well.
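To make the grid idea concrete, here is a minimal Python sketch of how plant locations could be stored as coordinates and visited in order. It is not FarmBot's own software: the `Point` class, the `plan_grid` and `travel_mm` helpers, the millimetre units, and the spacing values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Point:
    """A location in the bed, in millimetres from the home corner (assumed units)."""
    x: int      # position along the tracks
    y: int      # position along the gantry
    z: int = 0  # height of the Z-axis extrusion


def plan_grid(rows: int, cols: int, spacing_mm: int, margin_mm: int = 100) -> List[Point]:
    """Return evenly spaced plant locations in a back-and-forth visiting order."""
    points = []
    for r in range(rows):
        # Reverse every other row so the gantry does not travel back to the start.
        columns = range(cols) if r % 2 == 0 else reversed(range(cols))
        for c in columns:
            points.append(Point(margin_mm + r * spacing_mm, margin_mm + c * spacing_mm))
    return points


def travel_mm(path: List[Point]) -> int:
    """Sum of X and Y distances between consecutive stops (a simple Manhattan estimate)."""
    return sum(abs(b.x - a.x) + abs(b.y - a.y) for a, b in zip(path, path[1:]))


plants = plan_grid(rows=2, cols=3, spacing_mm=200)
for p in plants:
    print(p)
print("total travel (mm):", travel_mm(plants))
```

Because every plant and tool has a fixed coordinate, the same path can be repeated exactly on every run, which is where the repeatability of a CNC-style machine comes from.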

Movement is powered by four NEMA 17 stepper motors with rotary encoders that monitor relative motion. The rotary encoders are monitored by a dedicated processor in the custom Farmduino electronics board, which uses a Raspberry Pi 3 as the “brain”. The Farmduino v1.5 system has several useful features, including built-in stall detection for the motors. Stainless steel and aluminum hardware make the machine resistant to corrosion and safe for long-term use outdoors.

The FarmBot Genesis model also includes several tools made of UV-resistant ABS plastic that are interchanged using the Universal Tool Mount (UTM) on the Z-axis extrusion. The UTM uses 12 electrical connections, three liquid/gas lines, and magnetic coupling to mount and change tools with ease, allowing for automation at nearly every step of the planting and growing process. Sowing seeds without any human intervention is possible with the vacuum-powered seed injector tool, which is compatible with three different-sized needles to accommodate seeds of varying sizes. Once planting has been completed, regimens can be set up to water plants on a schedule using the watering nozzle. The attachment is coupled with a solenoid valve to control the flow of water, ensuring that each plant receives as much moisture as it needs. The soil sensor tool takes the automation of watering a step further by detecting the moisture of the soil and using the collected data to modify the amount of water dispensed as needed. A customizable weeding tool uses spikes to push young weeds into the soil before they become an issue. FarmBot uses a built-in waterproof camera to detect weeds and take photos of plants to track growth. All of these tools and features create a completely customizable farming experience without the worry of human error.
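The moisture-based watering described above can be sketched as a small calculation. This is only an illustration of the idea, not FarmBot's actual logic: the function names, the target moisture, the millilitres-per-percent factor, and the flow rate are all hypothetical values.

```python
def water_ml(moisture_pct: float, target_pct: float = 60.0,
             ml_per_pct: float = 5.0, max_ml: float = 250.0) -> float:
    """Return how much water to dispense for one plant.

    All default values are hypothetical and chosen only for illustration.
    """
    # Only water when the soil is drier than the target.
    deficit = max(0.0, target_pct - moisture_pct)
    # Cap the amount so a faulty sensor reading cannot flood the bed.
    return min(max_ml, deficit * ml_per_pct)


def valve_seconds(volume_ml: float, flow_ml_per_s: float = 50.0) -> float:
    """Convert a volume into how long the solenoid valve should stay open."""
    return volume_ml / flow_ml_per_s


for reading in (40.0, 70.0, 0.0):
    volume = water_ml(reading)
    print(f"moisture {reading}% -> {volume} ml, valve open {valve_seconds(volume)} s")
```

A drier reading produces a longer valve-open time, while soil that is already at or above the target receives no water at all.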

FarmBot operates using a 100% open-source operating system and web app. In the web app, we can easily control and configure FarmBot and create customizable sequences and routines, all with a drag-and-drop farm designer and a block-code format. From here we can see all information regarding the positioning, tools, and plants within the garden bed. FarmBot’s Raspberry Pi uses FarmBot OS to communicate and stay synchronized with the web app, allowing it to download and execute scheduled events, be controlled in real time, and upload logs and sensor data.

We faced many ups and downs during the hardware and software phases of FarmBot. From vague reference docs and manufacturing defects to hardware failures and network security problems, this was an in-depth and at times very frustrating project. However, we didn’t want an easy project. The challenges we faced when building FarmBot, as annoying as they were to debug at the time, helped us gain a great understanding of how this machine works. Through all the blood, sweat, and tears (literally), we learned more from this project than we ever could have imagined. From woodworking to circuitry to programming and botany, we tackled a wide range of issues. But that’s what made this project so worth it. Many times we had to resort to out-of-the-box thinking to resolve issues with some of the limited components we had. Working as a team also allowed us to bounce ideas off one another while each bringing our own unique talents to the team. Nicolas has a background in Computer Science, which helped with programming FarmBot as well as resolving the software and networking issues that occurred. Hannah’s major is Biological Sciences and she has experience with gardening, which helped in the plant science aspect of the project on top of overall planning and construction. As an electrical engineering major, Nicholas helped with the building process and wiring, and his input was critical in resolving issues with such an advanced electromechanical system. While we recognize FarmBot’s shortcomings, it was an incredible learning experience and an imperative building block towards a more sustainable and eco-friendly future.

Moving forward, we plan on modifying FarmBot to include a webcam, rain barrel, and solar panels. A webcam will give us a live feed of the FarmBot for remote monitoring and will enable us to take photos for timelapse photography. Rain barrels can be used to collect rainwater, which can be recycled and act as FarmBot’s water source, further increasing its sustainability. Solar panels will provide a dedicated, off-grid solar energy system, helping further reduce the cost and carbon emissions associated with running FarmBot. We also currently have an MIS capstone team developing ways to pull real-time data for future analysis and dataset usage in other academic settings.

Be on the lookout for future FarmBot updates, and feel free to reach out to opiminnovate@uconn.edu for more information.

By: Nicholas Satta, Hannah Meikle, Nicolas Michel

Wearable biometric technology is currently revolutionizing healthcare and consumer electronics. Internet-enabled pacemakers, smart watches with heart rate sensors and all manner of medical equipment now merge health with convenience. This is all a step in the right direction, but not quite the end goal. In fact, it seems that we’re past the point where technology can fix all the problems we’ve created. If this is the case then, at the very least, I think we can have a bit of fun while we’re still alive. Let us not mince words: We’re headed straight for a global environmental collapse, and I propose we go out in style.

For this reason, I’ve designed the Heart Rate Hat. This headwear is simultaneously a fashion statement, a demonstration of the capabilities of biometric sensors and the first step to the creation of a fully functioning cyborg. The Heart Rate Hat is exactly what I need to tie together my vision for a cyberpunk dystopia. Combined with other gear from the lab, such as our EEG headset and our electromyography equipment, we could raise some very interesting questions about what kinds of biometric data we actually want our technology to collect. We could also explore the consequences of being able to quantify human emotion and the use of such data to make predictions. What once was the realm of science fiction, we are now turning into reality.

The design of the device is relatively simple. I used the FLORA (a wearable, Arduino-compatible microcontroller) as the brain of the device, a Pulse Sensor to read heart rate data from the user’s ear lobe and several NeoPixel LED sequins for output. The speed at which the LEDs blink is determined by heart rate and the color is determined by heart rate variance. In the process of designing this, I learned how to work with conductive thread, how to program addressable LEDs and how to read and interpret heart rate and heart rate variance data from a sensor. As these are all important skills for wearable electronics prototyping, a similar project may be viable as an introduction to wearable product design. Going forward, I would like to explore other options for visualizing output from biometric sensors. I may work on outputting data from such a device to a computer via Bluetooth, incorporating additional sensors into the design and logging the data to be used for analysis.
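The mapping from heart data to LED behavior can be sketched in a few lines. The hat itself runs on an Arduino-compatible board, so this Python version only illustrates the logic and is not the code on the device. The function names, the one-blink-per-beat rule, the blue-to-red color scale, and the `max_variance` cutoff are all assumptions, as is treating "heart rate variance" as the variance of the time between beats.

```python
from statistics import pvariance


def bpm_from_intervals(ibi_ms):
    """Average heart rate from a list of inter-beat intervals in milliseconds."""
    return 60000.0 / (sum(ibi_ms) / len(ibi_ms))


def blink_interval_ms(bpm):
    """Blink once per heartbeat: a faster heart gives a shorter interval."""
    return 60000.0 / bpm


def variance_to_rgb(ibi_ms, max_variance=10000.0):
    """Blend from blue (steady rhythm) to red (highly variable rhythm).

    max_variance is a hypothetical cutoff, in ms squared, for full red.
    """
    level = min(1.0, pvariance(ibi_ms) / max_variance)
    red = round(255 * level)
    return (red, 0, 255 - red)


beats = [750, 850, 750, 850]  # made-up sample intervals, in ms
bpm = bpm_from_intervals(beats)
print("bpm:", bpm)
print("blink every (ms):", blink_interval_ms(bpm))
print("color (r, g, b):", variance_to_rgb(beats))
```

On the real hardware, the resulting interval and color would be passed to whatever routine drives the NeoPixels.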

Written By: Eli Udler

Artificial Intelligence is an interesting field that has become more and more integral to our daily lives. Its applications range from facial recognition to recommendation systems and automated client services. Such tasks make life a lot simpler, but are quite complex in and of themselves. These tasks rely on machine learning, which is how computers develop ways to recognize patterns. Learning these patterns accurately, however, requires loads of data. Luckily, in this day and age, there is a variety of data to train from and a variety of problems to tackle.

For this project, I wanted to use AI to show how it can integrate with other innovative technologies in the lab. I went with object detection because its applications in engineering rely on microcontrollers, data analytics, and the internet of things. For example, a self-driving car needs to be able to tell where the road is or if a person is up ahead. A task such as this simply wouldn’t function well without each component. Looking into the future, we will need more innovative ways to identify things, whether it be for a car or for security surveillance.

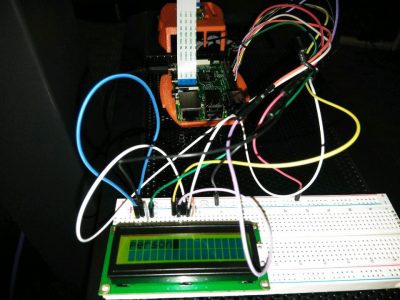

When I first started working in the Innovate Lab, I saw the LCD plate in one of the cabinet drawers and wanted to know how it worked. I was fascinated with its potential and decided to focus my project around the plate. The project itself was to have a Raspberry Pi recognize an object and output the label onto an LCD plate. The first aspect, recognizing objects, wasn’t too hard to create. There are multiple models online that are already trained and available for public use. These pre-trained models give the computer what it needs to recognize certain objects. The challenge came with installing all the programs required to use the model and run the script. Afterwards, outputting the text onto the plate simply required me to wire the plate to the Pi. The plate was needed to show the results; otherwise, users would have to plug in a monitor. Other alternatives could have been used as well. For example, the results could have been sent to a web application or stored in a file on the Pi. So far the results haven’t always been accurate, but that just leaves room for improvement. I am hoping that soon I will be able to run the detection script on data that has been streamed to the Pi. Overall, I gained a better understanding of the applications of AI and engineering. This was only one of the many capabilities of AI, and there is still so much more to try.
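The last step, turning a detection result into text for the plate, can be sketched as follows. This is a rough illustration rather than the script from the project: it assumes the model returns `(label, score)` pairs and that the plate is a 16x2 character display, and the names `best_detection` and `lcd_lines` are hypothetical. The model and the LCD driver are left out entirely.

```python
LCD_COLS = 16  # assumed width of the character display
LCD_ROWS = 2   # assumed number of rows


def best_detection(detections, min_score=0.5):
    """Pick the highest-scoring (label, score) pair, or None if none is confident enough."""
    confident = [d for d in detections if d[1] >= min_score]
    return max(confident, key=lambda d: d[1]) if confident else None


def lcd_lines(detection):
    """Format a detection as exactly LCD_ROWS strings of LCD_COLS characters each."""
    if detection is None:
        top, bottom = "No object", ""
    else:
        label, score = detection
        top, bottom = label, f"{score:.0%} sure"
    # Truncate long text and pad short text so each row fills the display.
    return [text[:LCD_COLS].ljust(LCD_COLS) for text in (top, bottom)]


# Made-up model output for illustration.
results = [("cat", 0.42), ("coffee mug", 0.87)]
for line in lcd_lines(best_detection(results)):
    print(repr(line))
```

Padding each row to the full width overwrites whatever was on the display before, so a short label does not leave stray characters from a longer one.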

By: Robert McClardy

This past semester, I participated in OPIM 4895: An Introduction to Industrial IoT, a course that brings data analytics and Splunk technology to the University’s Spring Valley student farm. In this course, I learned how to deploy sensors and data analytics to monitor real-time conditions in the greenhouse in order to practice sustainable farming and aquaponics. The sensors tracked pH, oxygen levels, water temperature, and air temperature, and the data was then analyzed through Splunk. At the greenhouse, we were able to visualize this data in real time using an augmented reality system with QR codes through Splunk technology. We were also able to monitor this data remotely through Apple TV Dashboards in the OPIM Innovate Lab on campus.
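As a small illustration of what one greenhouse sensor reading might look like before it is sent off for analysis, here is a Python sketch. How the course actually fed data into Splunk is not described here, so the field names, the JSON layout, and especially the acceptable ranges below are hypothetical.

```python
import json

# Hypothetical acceptable ranges, chosen only for illustration.
SAFE_RANGES = {
    "ph": (6.0, 7.5),
    "dissolved_oxygen_mg_l": (5.0, 12.0),
    "water_temp_c": (18.0, 30.0),
    "air_temp_c": (10.0, 35.0),
}


def out_of_range(reading):
    """Return the sorted names of measurements that fall outside their range."""
    alerts = []
    for name, value in reading.items():
        if name in SAFE_RANGES:
            low, high = SAFE_RANGES[name]
            if not (low <= value <= high):
                alerts.append(name)
    return sorted(alerts)


def to_event(reading, timestamp):
    """Package one reading as a JSON string that an analytics tool could ingest."""
    return json.dumps(
        {"time": timestamp, "event": reading, "alerts": out_of_range(reading)},
        sort_keys=True,
    )


# Made-up sample values.
sample = {"ph": 8.1, "dissolved_oxygen_mg_l": 7.2, "water_temp_c": 24.0, "air_temp_c": 38.5}
print(to_event(sample, timestamp=1700000000))
```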

As a senior who is graduating this upcoming December, I appreciated the opportunity to have hands-on experience working with emerging technology. Learning tangible skills is critical to students who plan on entering the workforce, especially in the world of technology. The Industrial IoT course has been one of my favorite courses of my undergraduate career as a student at UConn. I believe this is largely because it has significantly strengthened my technical skills through interactive learning, working closely with other students and faculty, and traveling on-site to the greenhouse. Using Splunk to analyze our own data that was produced by sensors that we deployed at the farm is a perfect example of experiential learning.

By: Radhika Kanaskar

Nowadays, companies like Apple and Facebook make software that can unlock your phone or tag you in a photo automatically using facial recognition. Facial recognition first attracted me because of its incredible ability to translate a few lines of code into recognition that mimics the human eye. How can this technology be helpful in the world of business? My first thought was cyber security. Although I had only one semester, I wanted to be able to use video to recognize faces. My first attempt used a Raspberry Pi as the central controller. Raspberry Pis are portable, affordable, and familiar because they are used throughout the OPIM Innovation Space. There is also a camera attachment, which I thought made it perfect for my project. After realizing that the program I attempted to develop used too much memory, I switched to using Python, a programming language, on my own laptop.

Installing Python was a challenge in itself, and I was writing code in a language I had never used before. Eventually I was able to get basic scripts to execute on my computer, and then I could install the OpenCV library and connect it to Python. OpenCV is an open-source computer vision library that can use stored face detection data to quickly run face recognition programs in Python. The setup was really difficult; if a single file location was not exactly specified, or had been moved by accident, then the entire OpenCV library wouldn’t be accessible from Python. It was these programs that taught me how to take a live video feed and greyscale it, so the computer wasn’t overloaded with information. Then I identified uniform perimeters of nearby objects in the video, traced them with a rectangle, and used data files developed by Intel to recognize whether those objects were the face of a person. The final product was really amazing. The Python script ran by using OpenCV to reference a large .xml file which held the data on how to identify a face. The program refreshed in real time, and as I looked into my live feed camera I watched the rectangular box follow the contour of my face. I was amazed by how much effort and how much complex code it took to carry out a seemingly simple task. Based on my experience with the project, I doubt that Skynet or any other sentient robots are going to be smart enough to take over the world anytime soon. In the meantime, I can’t wait to see how computer vision technology will make the world a better place to live in.
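Two of the steps above, greyscaling a frame and tracing a rectangle around a detected region, can be illustrated in plain Python. This is not the project's script and it does not use OpenCV: the face detection itself (the part driven by the .xml file) is left out, and the box position below is a hard-coded, hypothetical stand-in for a detector's output. The luminance weights are the standard ones for converting RGB to grey.

```python
def to_greyscale(frame):
    """Convert rows of (r, g, b) pixels into rows of single brightness values."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in frame
    ]


def draw_box(grey, x, y, w, h, value=255):
    """Return a copy of the image with a rectangle outline drawn on it."""
    out = [row[:] for row in grey]  # copy so the input frame is left untouched
    for col in range(x, x + w):
        out[y][col] = value          # top edge
        out[y + h - 1][col] = value  # bottom edge
    for row in range(y, y + h):
        out[row][x] = value          # left edge
        out[row][x + w - 1] = value  # right edge
    return out


# A tiny made-up 5x5 frame of dark red pixels.
frame = [[(40, 0, 0) for _ in range(5)] for _ in range(5)]
grey = to_greyscale(frame)

# Hypothetical detector output: a 3x3 box whose top-left corner is at (1, 1).
boxed = draw_box(grey, x=1, y=1, w=3, h=3)
for row in boxed:
    print(row)
```

Greyscaling cuts each pixel from three values to one, which is why it lightens the load on the computer before detection runs.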

By: Evan Gentile, Senior MEM Major