Artificial Intelligence is an interesting field that has become more and more integral to our daily lives. Its applications range from facial recognition to recommendation systems and automated customer service. These tools make life a lot simpler, but they are quite complex in and of themselves. Many of them rely on machine learning, which is how computers learn to recognize patterns. To become accurate, however, they need large amounts of data. Luckily, in this day and age there is a wide variety of data to train on and a wide variety of problems to tackle.

For this project, I wanted to use AI to show how it can integrate with other innovative technologies in the lab. I chose object detection because its engineering applications draw on microcontrollers, data analytics, and the Internet of Things. For example, a self-driving car needs to be able to tell where the road is and whether a person is up ahead, and a task like this simply wouldn't work well without each of those components. Looking into the future, we will need more innovative ways to identify things, whether for a car or for security surveillance.

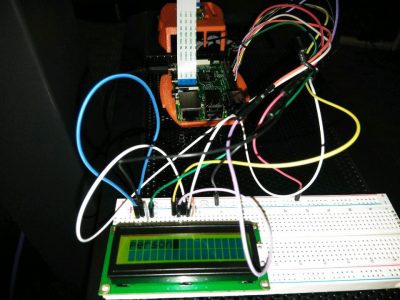

When I first started working in the Innovate Lab, I saw the LCD plate in one of the cabinet drawers and wanted to know how it worked. I was fascinated by its potential and decided to build my project around the plate. The goal was to have a Raspberry Pi recognize an object and display its label on the LCD plate. The first part, recognizing objects, wasn't too hard, because several models that have already been trained are available online for public use. A pre-trained model already contains the patterns it learned during training, so the computer can use it to recognize certain objects without being trained from scratch. The real challenge was installing all the software needed to load the model and run the script. After that, displaying the text on the plate only required wiring the plate to the Pi. The plate's purpose was to show the results without having to plug in a monitor, but other options would have worked as well: the results could have been sent to a web application or stored in a file on the Pi. So far the results haven't always been accurate, but that just leaves room for improvement. I hope that soon I will be able to run the detection script on data streamed to the Pi. Overall, I gained a better understanding of how AI and engineering work together. This is only one of AI's many capabilities, and there is still so much more to try.
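
Between the detector and the plate sits a small formatting step: pick the most confident label and fit it onto the display. This is a minimal sketch, not the project's actual script. It assumes the model returns a list of (label, score) pairs and that the plate is a 16x2 character LCD; `best_detection`, `format_for_lcd`, and the 0.5 score threshold are hypothetical. Sending the two strings to the hardware is left out because that call depends on the plate's driver library.

```python
from typing import List, Optional, Tuple

LCD_COLS = 16  # assumption: a 16x2 character display
LCD_ROWS = 2

Detection = Tuple[str, float]  # (label, confidence score from 0.0 to 1.0)


def best_detection(detections: List[Detection], min_score: float = 0.5) -> Optional[Detection]:
    """Return the highest-scoring detection at or above min_score, or None."""
    confident = [d for d in detections if d[1] >= min_score]
    if not confident:
        return None
    return max(confident, key=lambda d: d[1])


def format_for_lcd(detection: Optional[Detection]) -> List[str]:
    """Return LCD_ROWS strings, each truncated or space-padded to LCD_COLS characters."""
    if detection is None:
        lines = ["No object found", ""]
    else:
        label, score = detection
        lines = [label, f"conf: {score:.0%}"]  # e.g. 0.91 -> "conf: 91%"
    return [line[:LCD_COLS].ljust(LCD_COLS) for line in lines[:LCD_ROWS]]


if __name__ == "__main__":
    # Made-up detections for illustration only.
    detections = [("person", 0.91), ("bicycle", 0.42), ("dog", 0.67)]
    for row in format_for_lcd(best_detection(detections)):
        print(repr(row))
```

Raising `min_score` trades missed objects for fewer wrong labels on the plate, which is one knob to try when results are inaccurate.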

By: Robert McClardy

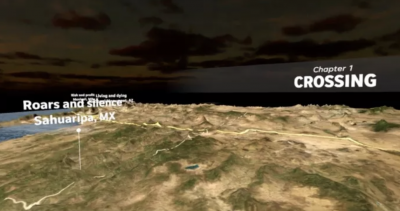

Virtual Reality is a new set of technologies that can accomplish educational, business, and recreational feats never seen before. If you had told somebody 50 years ago that they could climb and explore Mount Everest from the safety and comfort of their living room, they would not have believed it. As Mark Zuckerberg once said, “Virtual reality was once the dream of science fiction, but the internet was also once a dream, and so were computers and smartphones. The future is coming and we have a chance to build it together.” In OPIM Innovate, I recently had the opportunity to explore this past dream of science fiction with a Latino and Latin American Studies class: LLAS3998 –

One of the most-used features of the OPIM Innovate lab is the Fused Deposition Modeling (FDM) 3D printer. We are always interested in the latest innovations in 3D printing and in what students like to use the 3D printers for. We were lucky to have the chance to show a big-name company the upcoming technologies we had in the space.

I have been using CAD to make different models and designs since high school. It's so satisfying to design parts in a program and then bring them to life with 3D printing. In the OPIM Innovation Space, several of the 3D printers have some really special capabilities, and I wanted to put both my skills as a designer and the abilities of the printers to the test.

Nowadays, companies like Apple and Facebook make software that can unlock your phone or automatically tag you in a photo using facial recognition. What first attracted me to facial recognition was how a few lines of code can produce recognition that mimics the human eye. How could this technology help in the world of business? My first thought was cybersecurity. Although I only had one semester, I wanted to be able to use video to recognize faces. My first attempt used a Raspberry Pi as the central controller. Raspberry Pis are portable, affordable, and familiar, since they are used throughout the OPIM Innovation Space, and there is also a camera attachment, which I thought made the Pi perfect for my project. After realizing that the program I was developing used too much memory for the Pi, I switched to using Python, a programming language, on my own laptop.
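
A common way to do the recognition step is to turn each detected face into a fixed-length list of numbers (an "encoding") and compare it with the stored encodings of known people. The sketch below shows only that comparison, as a nearest-neighbor match with a distance cutoff. It is not the author's program: `identify` and the toy vectors are hypothetical, and the 0.6 cutoff is an assumption borrowed from the default tolerance of the open-source `face_recognition` library. Finding faces in video frames and computing their encodings would need a separate library.

```python
import math
from typing import Dict, Optional, Sequence


def identify(
    face: Sequence[float],
    known_faces: Dict[str, Sequence[float]],
    tolerance: float = 0.6,
) -> Optional[str]:
    """Return the name whose stored encoding is nearest to `face`,
    or None if even the nearest one is farther than `tolerance`."""
    best_name: Optional[str] = None
    best_distance = math.inf
    for name, encoding in known_faces.items():
        distance = math.dist(face, encoding)  # Euclidean distance (Python 3.8+)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= tolerance else None


if __name__ == "__main__":
    # Toy 3-number "encodings"; real face encoders produce much longer vectors.
    known = {"alice": [0.1, 0.2, 0.3], "bob": [0.9, 0.8, 0.7]}
    print(identify([0.12, 0.21, 0.29], known))  # prints: alice
    print(identify([5.0, 5.0, 5.0], known))     # prints: None
```

Returning `None` when no stored face is close enough matters for a security use: an unknown person should be reported as unknown rather than matched to whoever happens to be nearest.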

Before the introduction of Apple’s “Siri” in 2010, Artificial Intelligence voice assistants were no more than science fiction. Fast forward to today, and you will find them everywhere, from your phone, where they help you navigate your contacts and calendar, to your home, where they help you around the house. Each smart assistant has its pros and cons, and everyone has a favorite. Over the last few years, I have really enjoyed working with Amazon’s Alexa smart assistant. I began working with Alexa during my summer internship at Travelers in 2016, when I attended an after-work “build night” where we learned how to start developing with Amazon Web Services and the Alexa platform. Since then, I’ve developed six different skills and received Amazon hoodies, t-shirts, and Echo Dots for my work.
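
Under the hood, a custom Alexa skill is often backed by an AWS Lambda function that receives a JSON request from Alexa and returns a JSON response. The sketch below is a minimal illustration using the raw request/response format rather than Amazon's ASK SDK, and it is not one of the author's six skills; `HelloIntent` and all spoken text are hypothetical placeholders.

```python
from typing import Any, Dict


def build_response(speech: str, end_session: bool = True) -> Dict[str, Any]:
    """Wrap plain-text speech in the Alexa Skills Kit JSON response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Route an incoming Alexa request by its type and, for intents, by intent name."""
    request = event["request"]
    request_type = request["type"]

    if request_type == "LaunchRequest":
        # The user opened the skill without asking for anything specific,
        # so keep the session open for a follow-up.
        return build_response("Welcome! Try saying hello.", end_session=False)

    if request_type == "IntentRequest":
        intent_name = request["intent"]["name"]
        if intent_name == "HelloIntent":  # hypothetical custom intent
            return build_response("Hello from a sample skill.")
        if intent_name in ("AMAZON.StopIntent", "AMAZON.CancelIntent"):
            return build_response("Goodbye.")
        return build_response("Sorry, I don't know that one yet.")

    # Any other request type (e.g. SessionEndedRequest): Alexa does not use
    # a spoken reply here, so return an empty response body.
    return {"version": "1.0", "response": {}}
```

Setting `shouldEndSession` to `False` only on launch lets the user speak again right away; every other reply closes the session.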
