New SAS JMP projects are coming soon!

Projects

COMING SOON

New Tableau projects are coming soon!

COMING SOON

New Drone projects are underway!

COMING SOON

New Blockchain projects are underway!

COMING SOON

New augmented reality projects are underway!

The Heart Rate Hat

Wearable biometric technology is currently revolutionizing healthcare and consumer electronics. Internet-enabled pacemakers, smartwatches with heart rate sensors and all manner of medical equipment now merge health with convenience. This is all a step in the right direction, but not quite the end goal. In fact, it seems that we’re past the point where technology can fix all the problems we’ve created. If this is the case, then at the very least, I think we can have a bit of fun while we’re still alive. Let us not mince words: We’re headed straight for a global environmental collapse, and I propose we go out in style.


For this reason, I’ve designed the Heart Rate Hat. This headwear is simultaneously a fashion statement, a demonstration of the capabilities of biometric sensors and the first step to the creation of a fully functioning cyborg. The Heart Rate Hat is exactly what I need to tie together my vision for a cyberpunk dystopia. Combined with other gear from the lab, such as our EEG headset and our electromyography equipment, we could raise some very interesting questions about what kinds of biometric data we actually want our technology to collect. We could also explore the consequences of being able to quantify human emotion and the use of such data to make predictions. What once was the realm of science fiction, we are now turning into reality.

The design of the device is relatively simple. I used the FLORA (a wearable, Arduino-compatible microcontroller) as the brain of the device, a Pulse Sensor to read heart rate data from the user’s earlobe and several NeoPixel LED sequins for output. The speed at which the LEDs blink is determined by heart rate, and their color is determined by heart rate variance. In the process of designing this, I learned how to work with conductive thread, how to program addressable LEDs and how to read and interpret heart rate and heart rate variance data from a sensor. As these are all important skills for wearable electronics prototyping, a similar project could serve as an introduction to wearable product design. Going forward, I would like to explore other options for visualizing output from biometric sensors. I may send data from such a device to a computer via Bluetooth, incorporate additional sensors into the design and log the data for later analysis.
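To make the mapping from pulse to light concrete, here is a minimal sketch in Python of the kind of logic described above. It is not the firmware that ran on the FLORA (that would be an Arduino sketch). It assumes the Pulse Sensor readings have already been turned into a list of inter-beat intervals in milliseconds, measures "variance" as the standard deviation of those intervals (the square root of their variance), and uses hypothetical thresholds (`LOW_SD_MS`, `HIGH_SD_MS`) and a blue-to-red color scale chosen only for illustration.

```python
from statistics import mean, pstdev

# Hypothetical thresholds (ms) separating a steady rhythm from a highly variable one.
LOW_SD_MS = 20.0
HIGH_SD_MS = 100.0


def bpm_from_ibis(ibis_ms):
    """Heart rate in beats per minute from inter-beat intervals (ms)."""
    return 60000.0 / mean(ibis_ms)


def blink_interval_ms(ibis_ms):
    """Blink once per average heartbeat: a faster pulse gives a shorter interval."""
    return mean(ibis_ms)


def variability_color(ibis_ms, low=LOW_SD_MS, high=HIGH_SD_MS):
    """Map the spread of inter-beat intervals onto a blue-to-red (R, G, B) color.

    Spread is the population standard deviation of the intervals.
    At or below `low` the color is pure blue; at or above `high`, pure red.
    """
    spread = pstdev(ibis_ms)
    t = (spread - low) / (high - low)
    t = max(0.0, min(1.0, t))  # clamp to [0, 1]
    red = round(255 * t)
    return (red, 0, 255 - red)


if __name__ == "__main__":
    recent = [760, 840, 800, 800]  # example inter-beat intervals in ms
    print(f"{bpm_from_ibis(recent):.1f} BPM")
    print("blink every", blink_interval_ms(recent), "ms")
    print("color", variability_color(recent))
```

A steadier rhythm pushes the sequins toward blue and a more variable one toward red. The RGB tuple is the kind of value a NeoPixel driver takes, but the call that actually sets each pixel is left out because it depends on the library used.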


Written By: Eli Udler

K-Cup Holder for the UConn Writing Center

One of the most amazing things about 3D printing is the speed at which an idea becomes a design. With the growing prevalence of this technology, the time between thinking up an object you would like to exist and seeing it constructed continues to shrink. The thought of turning something I dreamed up into reality was my primary inspiration for this project: a sculpture of the UConn Writing Center logo that doubles as a K-Cup holder.

I was excited to find out that a Writing Center tutor was kind enough to donate a Keurig to the office, putting life-giving caffeine in the hands of tutors without the cost of running down to Bookworms Cafe. Alas, it was disturbing to see that the K-Cups used by the machine were being stored in a small basket. Now, I’m not the Queen of England or anything, but I have my limits. The toll on my mental health from watching the cups lazily thrown into a pile in the woven container was enough to force me to take action. With less than half an hour of active work, I was able to turn the Writing Center logo, a stylized “W”, into a three-dimensional model complete with holes designed to hold K-Cups.


But there’s another reason I decided to turn my strange idea into a reality: I wanted to highlight the range of resources offered on campus to UConn students. The OPIM Innovate space and the Writing Center aren’t so different, really. While the Writing Center can assist students with their writing in a variety of disciplines, Innovate provides a range of tech kits that teach students about emerging technologies. Both are spaces outside the classroom where students can learn relevant skills, regardless of their majors. Most importantly, perhaps, both were kind enough to hire me.

The print currently resides in the Writing Center office where tutors can sit down, enjoy a cup of freshly brewed coffee from a large sculpture of a W and savor the bold taste of interdisciplinary collaboration.

Written by: Eli Udler

AI Recognition

Artificial Intelligence is an interesting field that has become more and more integral to our daily lives. Its applications range from facial recognition and recommendation systems to automated client services. Such tasks make life a lot simpler, but they are quite complex in and of themselves. Many of them rely on machine learning, the process by which computers learn to recognize patterns. To recognize those patterns accurately, however, computers need large amounts of data. Luckily, in this day and age there is a wide variety of data to train on and a wide variety of problems to tackle.


For this project, I wanted to show how AI can integrate with other innovative technologies in the lab. I chose object detection because its engineering applications rely on microcontrollers, data analytics, and the Internet of Things. For example, a self-driving car needs to be able to tell where the road is and whether a person is up ahead, a task that simply wouldn’t work well without each of these components. Looking to the future, we will need more innovative ways to identify objects, whether for cars or for security surveillance.

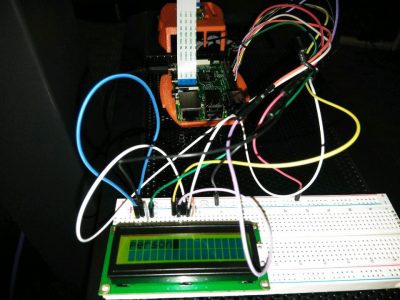

When I first started working in the Innovate Lab, I saw the LCD plate in one of the cabinet drawers and wanted to know how it worked. I was fascinated with its potential and decided to focus my project around the plate. The goal was to have a Raspberry Pi recognize an object and output its label onto the LCD plate. The first part, recognizing objects, wasn’t too hard to set up: multiple models that have already been trained are available online for public use. These pre-trained models already contain what the computer has learned about recognizing certain objects, so I didn’t have to train one myself. The real challenge was installing all the programs required to use the model and run the script. After that, outputting the text onto the plate simply required wiring the plate to the Pi.

The plate lets users see the results without plugging in a monitor, but other alternatives could have worked as well. For example, the results could have been sent to a web application or stored in a file on the Pi. So far the results haven’t always been accurate, but that just leaves room for improvement. I am hoping that soon I will be able to run the detection script on data streamed to the Pi. Overall, I gained a better understanding of the applications of AI in engineering. This was only one of the many capabilities of AI, and there is still so much more to try.
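The last step, turning a detection into text for the plate, can be sketched as follows. This is a minimal illustration, not the script used in the project: it assumes the model’s output has already been reduced to label/confidence pairs (the `Detection` class is hypothetical), that the plate is a 16-character, two-row character LCD, and it leaves out the call that writes to the plate, since that depends on the plate’s driver library.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical container for one model result."""
    label: str
    score: float  # model confidence in [0, 1]


def best_label(detections, threshold=0.5):
    """Return the highest-confidence detection at or above threshold, or None."""
    confident = [d for d in detections if d.score >= threshold]
    if not confident:
        return None
    return max(confident, key=lambda d: d.score)


def lcd_lines(detections, threshold=0.5, width=16):
    """Format the top detection as two fixed-width rows for a character LCD."""
    top = best_label(detections, threshold)
    if top is None:
        rows = ["No object", "detected"]
    else:
        rows = [top.label, f"{top.score:.0%} confident"]
    # Truncate long text and pad short text so each row fills the display width.
    return [row[:width].ljust(width) for row in rows]


if __name__ == "__main__":
    results = [Detection("cup", 0.87), Detection("person", 0.42)]
    for row in lcd_lines(results):
        print(repr(row))
```

Keeping the formatting separate from the display makes it easy to swap outputs: the same two lines could be appended to a log file on the Pi or sent to a web application instead.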


By: Robert McClardy

An Introduction to Industrial IoT

This past semester, I participated in OPIM 4895: An Introduction to Industrial IoT, a course that brings data analytics and Splunk technology to the University’s Spring Valley student farm. In this course, I learned how to deploy sensors and data analytics to monitor real-time conditions in the greenhouse in order to practice sustainable farming and aquaponics. The sensors tracked pH, oxygen levels, water temperature, and air temperature, and the data was then analyzed in Splunk. At the greenhouse, we could visualize this data in real time using an augmented reality system built on Splunk that used QR codes. We could also monitor the data remotely through Apple TV dashboards in the OPIM Innovate Lab on campus.
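In the course, Splunk handled this analysis through its own searches and dashboards. The sketch below is not Splunk code; it is a minimal Python illustration of two basic checks a greenhouse monitor might run on sensor readings: flagging values outside an acceptable range and averaging each metric over a window. The metric names are assumptions (oxygen is taken to mean dissolved oxygen in mg/L), and the ranges in `LIMITS` are hypothetical placeholders, not recommendations for any crop or fish.

```python
from statistics import mean

# Hypothetical acceptable ranges (low, high); real limits depend on the system.
LIMITS = {
    "ph": (6.5, 7.5),
    "oxygen_mg_l": (5.0, 12.0),  # assumed: dissolved oxygen in mg/L
    "water_temp_c": (18.0, 30.0),
    "air_temp_c": (10.0, 35.0),
}


def out_of_range(reading, limits=LIMITS):
    """Return the names of metrics in one reading that fall outside their limits."""
    flagged = []
    for metric, (low, high) in limits.items():
        value = reading.get(metric)
        if value is not None and not low <= value <= high:
            flagged.append(metric)
    return flagged


def averages(readings, limits=LIMITS):
    """Average each metric over a window of readings, skipping missing values."""
    result = {}
    for metric in limits:
        values = [r[metric] for r in readings if metric in r]
        if values:
            result[metric] = mean(values)
    return result


if __name__ == "__main__":
    window = [
        {"ph": 7.1, "oxygen_mg_l": 6.2, "water_temp_c": 22.5, "air_temp_c": 26.0},
        {"ph": 7.9, "oxygen_mg_l": 4.1, "water_temp_c": 23.0, "air_temp_c": 27.5},
    ]
    for reading in window:
        print(out_of_range(reading))
    print(averages(window))
```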

As a senior graduating this upcoming December, I appreciated the opportunity to gain hands-on experience working with emerging technology. Learning tangible skills is critical for students who plan on entering the workforce, especially in the world of technology. The Industrial IoT course has been one of my favorite courses of my undergraduate career at UConn. I believe this is largely because it significantly strengthened my technical skills through interactive learning, close work with other students and faculty, and on-site trips to the greenhouse. Using Splunk to analyze data produced by sensors that we deployed at the farm ourselves is a perfect example of experiential learning.

By: Radhika Kanaskar

Immigration and Virtual Reality

Virtual Reality is a new set of technologies with the ability to accomplish educational, business, and recreational feats never seen before. If you had told somebody 50 years ago that they could climb and explore Mount Everest from the safety and comfort of their living room, they would not have believed it. As Mark Zuckerberg once said, “Virtual reality was once the dream of science fiction, but the internet was also once a dream, and so were computers and smartphones. The future is coming and we have a chance to build it together.” In OPIM Innovate, I recently had the opportunity to explore this past dream of science fiction with a Latino and Latin American Studies class: LLAS3998 – Human Rights on the US/Mexican Border: Narratives of Immigration.


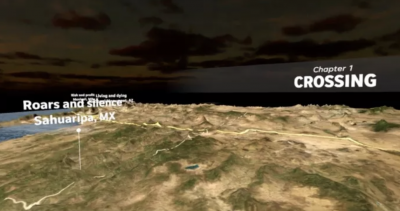

The lab was coordinated by Anne Gebelein, a UConn professor who wanted to expose her class to public policy narratives surrounding the United States border, immigration, and other Latin American topics. Gebelein realized that a new educational medium like Virtual Reality could give her class a broader and much more meaningful experience of these challenging topics. Working with the Innovate team, I was able to construct a curriculum containing learning materials for a specially designed VR workshop: Creating Empathy for Human Rights. We used the HTC Vive virtual reality system as well as a set of Google Cardboard viewers to facilitate this event.

One of the experiences showcased was a virtual reality environment created by USA Today known as The Wall. This Vive-only application allowed students to explore various parts of the USA-Mexico border wall as it exists today. The sheer size of the border and the amount of land it covers surprised many students. To further explore the topic of human rights, students also tried a solitary confinement VR experience that placed them in the shoes of an immigration detainee. Several students commented on the gravity and sheer discomfort they felt when they entered this environment.

Overall, VR has certainly begun to prove itself a viable technology for a new style of practical teaching and learning. Throughout this workshop, the LLAS class and Professor Gebelein were exposed to environments they never could have entered through traditional teaching methods. I was very pleased that the workshop was a resounding success, and we hope to further develop our materials and assist with more activities like this in the future.

By: Thomas Hannon